One of the major challenges in gravitational wave data analysis is accurately predicting the properties of the progenitor black hole and neutron star systems from data recorded by LIGO and Virgo. The faint gravitational wave signals are buried in instrumental and terrestrial noise.

LIGO and Virgo use data analysis techniques that aim to minimise this noise, including software that can ‘gate’ the data by removing the parts corrupted by sharp noise features called ‘glitches’. They also use methods that extract the pure gravitational-wave signal from the noise altogether. However, these techniques are usually slow and computationally intensive, which can be detrimental to multi-messenger astronomy: observing the electromagnetic counterparts of binary neutron star mergers, such as short gamma-ray bursts, relies heavily on fast and accurate predictions of the sky direction and masses of the sources.
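
As a rough illustration of what gating means in practice, here is a minimal sketch that zeroes out the data around unusually loud samples and tapers the edges smoothly. It is not the LIGO-Virgo implementation: the function name `gate_glitches`, the threshold, and the window widths are all hypothetical choices made for this example.

```python
import numpy as np

def gate_glitches(strain, threshold=8.0, half_width=16, taper=16):
    """Suppress data around samples louder than `threshold` robust sigmas.

    Returns the gated series and the multiplicative window that was applied.
    All defaults are illustrative assumptions, not pipeline settings.
    """
    strain = np.asarray(strain, dtype=float)
    # Robust noise scale from the median absolute deviation, so that the
    # glitch itself does not inflate the estimate.
    med = np.median(strain)
    sigma = 1.4826 * np.median(np.abs(strain - med))
    loud = np.flatnonzero(np.abs(strain - med) > threshold * sigma)

    window = np.ones_like(strain)
    if loud.size:
        # Distance (in samples) from every sample to the nearest loud sample.
        idx = np.arange(strain.size)
        dist = np.abs(idx[:, None] - loud[None, :]).min(axis=1)
        # 0 inside the gate, half-cosine rise over `taper` samples, 1 beyond.
        x = np.clip((dist - half_width) / taper, 0.0, 1.0)
        window = 0.5 * (1.0 - np.cos(np.pi * x))
    return strain * window, window

# Usage: Gaussian noise with one artificial glitch injected at sample 1000.
rng = np.random.default_rng(0)
data = rng.normal(size=2048)
data[1000] += 100.0
gated, window = gate_glitches(data)
```

The smooth taper is there to avoid introducing a sharp edge of its own; a hard cut to zero would add broadband artefacts to the data.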

In our recent study, we developed a deep learning model that can extract pure gravitational wave signals from detector data much faster than the best conventional techniques, with similar accuracy. Unlike traditional programming, which relies on a set of explicit instructions (or code) to perform a task, deep learning algorithms generate predictions by identifying patterns in data. These algorithms are realised by ‘neural networks’, models inspired by the neurons in our brain that are ‘trained’ to generate near-accurate predictions on data almost instantly.

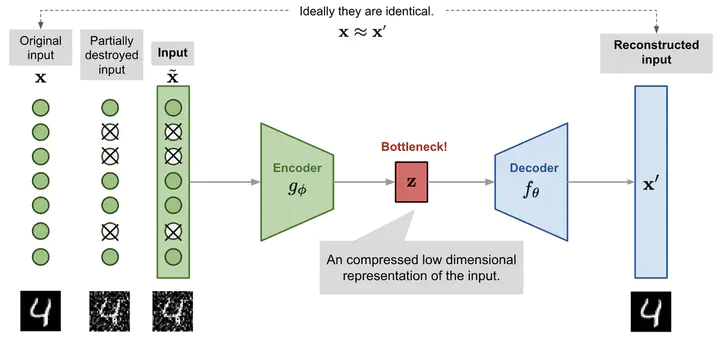

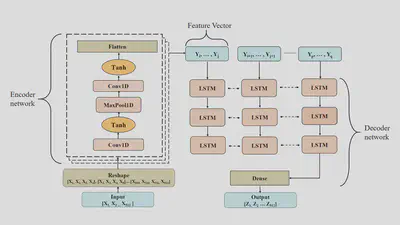

The deep learning architecture we designed, called a ‘denoising autoencoder’, consists of two separate neural networks: the Encoder and the Decoder. The Encoder reduces the size of the noisy input signals and generates a compressed representation that encapsulates the essential features of the pure signal. The Decoder ‘learns’ to reconstruct the pure signal from this compressed feature representation. For the Encoder network, we used a Convolutional Neural Network (CNN), a type of network widely used for image classification and computer vision tasks because it is efficient at extracting distinctive features from data. For the Decoder network, we used a Long Short-Term Memory (LSTM) network, which learns to make future predictions from past time-series data. A diagram of our denoising autoencoder network is shown in Figure 1.
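
To make the Encoder-Decoder data flow concrete, here is a minimal, untrained forward pass written in plain NumPy. It is only a sketch of the general CNN-then-LSTM idea and not our published network: the class name `DenoisingAutoencoderSketch`, the single convolution layer, the single LSTM layer, the output layer, and every size (kernel, stride, filters, hidden units) are assumptions chosen to keep the example short.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class DenoisingAutoencoderSketch:
    """Untrained CNN encoder + LSTM decoder (forward pass only).

    All layer sizes are illustrative assumptions. The input length must be
    a multiple of `stride` for the output to have the same length.
    """

    def __init__(self, kernel=16, stride=8, filters=4, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.kernel, self.stride, self.hidden = kernel, stride, hidden
        # Encoder: one strided 1-D convolution with `filters` feature maps.
        self.W_conv = rng.normal(0.0, 0.1, (kernel, filters))
        self.b_conv = np.zeros(filters)
        # Decoder: one LSTM layer; the four gates are stacked on the last axis.
        self.W_x = rng.normal(0.0, 0.1, (filters, 4 * hidden))
        self.W_h = rng.normal(0.0, 0.1, (hidden, 4 * hidden))
        self.b = np.zeros(4 * hidden)
        # Output layer: each hidden state is mapped to `stride` output samples.
        self.W_out = rng.normal(0.0, 0.1, (hidden, stride))
        self.b_out = np.zeros(stride)

    def encode(self, noisy):
        """Compress the noisy series into a shorter sequence of feature vectors."""
        pad = self.kernel - self.stride
        x = np.pad(np.asarray(noisy, dtype=float), (pad // 2, pad - pad // 2))
        windows = sliding_window_view(x, self.kernel)[:: self.stride]
        return np.maximum(windows @ self.W_conv + self.b_conv, 0.0)  # ReLU

    def decode(self, features):
        """Run the LSTM over the features and emit one output chunk per step."""
        h = np.zeros(self.hidden)
        c = np.zeros(self.hidden)
        chunks = []
        for x_t in features:
            i, f, g, o = np.split(x_t @ self.W_x + h @ self.W_h + self.b, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # update cell state
            h = sigmoid(o) * np.tanh(c)                   # update hidden state
            chunks.append(h @ self.W_out + self.b_out)
        return np.concatenate(chunks)

    def __call__(self, noisy):
        return self.decode(self.encode(noisy))

# Usage: a toy chirp buried in noise (synthetic data, not detector strain).
rng = np.random.default_rng(1)
t = np.linspace(0.0, 1.0, 512, endpoint=False)
noisy = np.sin(2 * np.pi * (20 * t + 40 * t**2)) + rng.normal(size=t.size)
model = DenoisingAutoencoderSketch()
features = model.encode(noisy)   # shape (64, 4): half as many numbers as the input
reconstruction = model(noisy)    # shape (512,)
```

Because the weights here are random, the output is not a denoised signal. Training, which is not shown, would adjust the weights so that the reconstruction approaches the clean signal, for example by minimising a mean-squared error against simulated templates; that loss choice is an assumption of this sketch.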

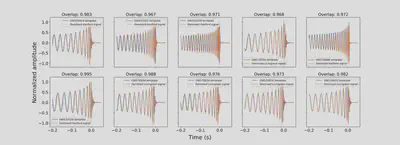

Our CNN-LSTM model successfully extracts pure gravitational wave signals from detector data for all ten binary black hole signals detected by LIGO-Virgo during the first and second observing runs (Figure 2).

It’s the first deep learning-based model to obtain a match of more than 97% between the extracted signals and the ‘ground truth’ signal ‘templates’ for all of these detected events. It is also much faster than current techniques: our model can accurately extract a single gravitational wave signal from noise in less than a millisecond, compared with a few seconds for other methods.
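
For readers unfamiliar with the term, a ‘match’ is a normalised overlap between two waveforms, where 1 means identical shape. The sketch below shows a simplified version: it assumes the data are already whitened (flat noise spectrum) and maximises only over circular time shifts, whereas a full gravitational-wave match is noise-weighted and also maximised over phase. The function name `overlap_max_over_shift` is hypothetical, and this is not necessarily the exact computation behind the numbers quoted above.

```python
import numpy as np

def overlap_max_over_shift(a, b):
    """Normalised overlap of two equal-length real series, maximised over
    circular time shifts. Assumes whitened data (flat noise spectrum)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.shape != b.shape or a.ndim != 1:
        raise ValueError("inputs must be 1-D arrays of equal length")
    # Circular cross-correlation for every shift at once, via the FFT.
    corr = np.fft.irfft(np.fft.rfft(a) * np.conj(np.fft.rfft(b)), n=a.size)
    return corr.max() / np.sqrt(np.dot(a, a) * np.dot(b, b))

# Usage with a toy chirp standing in for a template.
t = np.linspace(0.0, 1.0, 512, endpoint=False)
template = np.sin(2 * np.pi * (20 * t + 40 * t**2))
rng = np.random.default_rng(0)
extracted = np.roll(template, 7) + 0.3 * rng.normal(size=t.size)
match = overlap_max_over_shift(extracted, template)
```

The normalisation makes the result independent of overall amplitude, so the number reflects how well the shapes agree rather than how loud the signals are.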

We are now using our CNN-LSTM model with other deep learning models to predict important gravitational wave source parameters, like the sky direction and ‘chirp mass’. We’re also working on generalising the model to accurately extract single signals from low-mass black hole binaries and neutron star binaries.

Paper status: Published in Physical Review D
